It was just a “funny” reference to Dune, since in Dune “the spice must flow” and all that… There is no storyform as far as I know.

I am at a university, and when I sat down to go online with my new iPad Pro for the first time using the secure internet access here, I got the scary red “not trusted” warning on the certificate issued by (etc.). I immediately thought of this post. I don’t think it is a Safari thing, since this has to do with the iPad settings. I have to click the blue ‘Trust’ button to sign up for the university’s secure portal. Maybe they are having us do some of their work… haha

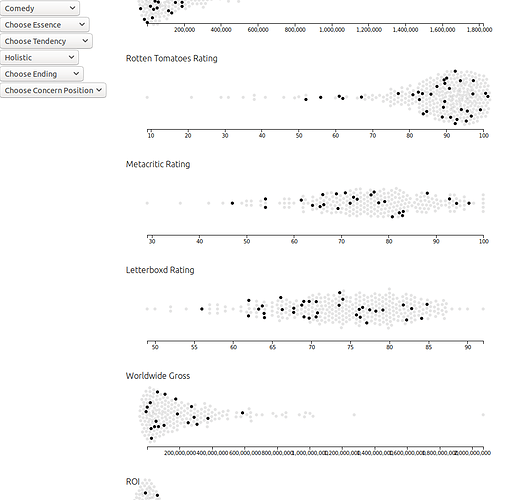

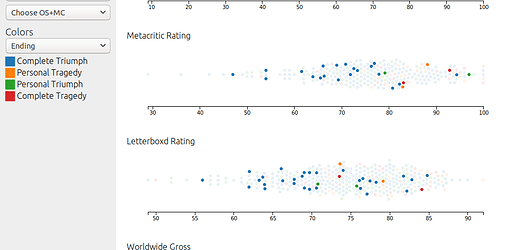

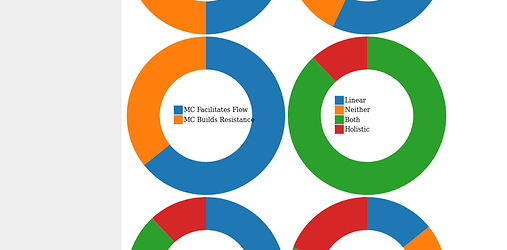

Because I seem to have too much time on my hands, I added the films’ Rotten Tomatoes and Metacritic scores (where available). It is interesting to see how differently some of the story points are apparently valued. E.g., for Romance, Rotten Tomatoes seems to prefer Overwhelming Odds, while the other two prefer Surmountable Odds.

On the other hand, some choices remain clear. E.g., for Romance/Love Stories, Complete Tragedy is the least safe choice for IMDB, RT, and MC, while Personal Tragedy is the safest for all three.

That said, there is a problem. Rotten Tomatoes has a lot of love for old movies; most of them enjoy 100% but don’t have that many votes. Metacritic, on the other hand, doesn’t have a lot of the old movies in the first place.

Here is a link to all genre “recommendations”.

Here is Romance as an example:

IMDB Rating

- Nature:
  - Best: Actual Dilemma (Q1 71.0%, median 73.0%, Q3 76.5%)
  - Worst: Apparent Work (Q1 61.25%, median 73.5%, Q3 76.75%)
- Essence:
  - Best: Surmountable Odds (Q1 71.0%, median 73.0%, Q3 77.0%)
  - Worst: Overwhelming Odds (Q1 69.75%, median 73.0%, Q3 75.5%)
- Tendency:
  - Best: MC Builds Resistance (Q1 71.0%, median 73.5%, Q3 77.0%)
  - Worst: MC Facilitates Flow (Q1 69.0%, median 73.0%, Q3 77.0%)
- Audience:
  - Best: Both (Q1 71.0%, median 74.0%, Q3 77.0%)
  - Worst: Neither (Q1 69.5%, median 71.0%, Q3 73.0%)
- Concern_Position:
  - Best: top right (Q1 71.0%, median 74.0%, Q3 75.0%)
  - Worst: top left (Q1 67.0%, median 71.0%, Q3 77.0%)
- Ending:
  - Best: Personal Tragedy (Q1 72.0%, median 73.0%, Q3 74.0%)
  - Worst: Complete Tragedy (Q1 65.5%, median 76.0%, Q3 76.5%)
- OS+MC:
  - Best: OS_Universe, MC_Psychology (Q1 72.5%, median 75.5%, Q3 77.0%)
  - Worst: OS_Physics, MC_Universe (Q1 69.0%, median 71.0%, Q3 74.0%)

Rotten Tomatoes Rating

- Nature:
  - Best: Actual Dilemma (Q1 83.0%, median 89.0%, Q3 96.0%)
  - Worst: Apparent Work (Q1 55.75%, median 76.5%, Q3 84.5%)
- Essence:
  - Best: Overwhelming Odds (Q1 79.5%, median 90.0%, Q3 95.25%)
  - Worst: Surmountable Odds (Q1 79.5%, median 87.0%, Q3 92.0%)
- Tendency:
  - Best: MC Builds Resistance (Q1 83.0%, median 87.5%, Q3 94.25%)
  - Worst: MC Facilitates Flow (Q1 77.0%, median 88.0%, Q3 94.0%)
- Audience:
  - Best: Neither (Q1 93.0%, median 96.0%, Q3 96.5%)
  - Worst: Holistic (Q1 79.5%, median 85.0%, Q3 93.0%)
- Concern_Position:
  - Best: bottom left (Q1 84.0%, median 90.0%, Q3 93.5%)
  - Worst: top left (Q1 75.0%, median 80.0%, Q3 88.0%)
- Ending:
  - Best: Personal Tragedy (Q1 91.0%, median 92.0%, Q3 97.0%)
  - Worst: Complete Tragedy (Q1 62.5%, median 76.0%, Q3 81.5%)
- OS+MC:
  - Best: OS_Physics, MC_Mind (Q1 86.5%, median 90.0%, Q3 96.5%)
  - Worst: OS_Physics, MC_Universe (Q1 70.0%, median 92.0%, Q3 97.0%)

Metacritic Rating

- Nature:
  - Best: Apparent Dilemma (Q1 69.0%, median 79.0%, Q3 86.0%)
  - Worst: Apparent Work (Q1 55.5%, median 64.5%, Q3 73.5%)
- Essence:
  - Best: Surmountable Odds (Q1 67.0%, median 76.0%, Q3 83.0%)
  - Worst: Overwhelming Odds (Q1 66.0%, median 72.5%, Q3 80.25%)
- Tendency:
  - Best: MC Builds Resistance (Q1 67.25%, median 74.5%, Q3 82.75%)
  - Worst: MC Facilitates Flow (Q1 66.0%, median 72.0%, Q3 81.0%)
- Audience:
  - Best: Neither (Q1 80.5%, median 94.0%, Q3 94.0%)
  - Worst: Both (Q1 66.75%, median 76.0%, Q3 83.0%)
- Concern_Position:
  - Best: bottom right (Q1 69.0%, median 70.5%, Q3 72.75%)
  - Worst: top left (Q1 60.0%, median 69.0%, Q3 86.0%)
- Ending:
  - Best: Personal Tragedy (Q1 78.0%, median 83.0%, Q3 83.0%)
  - Worst: Complete Tragedy (Q1 59.5%, median 66.0%, Q3 76.5%)
- OS+MC:
  - Best: OS_Physics, MC_Mind (Q1 71.5%, median 77.0%, Q3 80.0%)
  - Worst: OS_Physics, MC_Universe (Q1 66.0%, median 72.0%, Q3 76.0%)

The numbers in brackets show the interquartile range: 50% of the movies concerned have a rating between Q1 and Q3 (75% are at least Q1, 50% are at least the median, 25% are at least Q3). Note that we only consider options that have at least 3 examples.
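For anyone who wants to reproduce this kind of summary, here is a minimal sketch. The post doesn't say how the quartiles were computed or how Best and Worst were picked, so everything below is an assumption: quartiles use linear interpolation (`statistics.quantiles` with `method="inclusive"`, which matches NumPy's default), options with fewer than 3 rated films are dropped, and options are ranked by Q1 with the median as a tie-breaker. That ranking rule is a guess, though it does appear consistent with the Best/Worst pairs listed above. The ratings are hypothetical.

```python
"""Sketch: per-option (Q1, median, Q3) summaries and best/worst picks.

Assumptions: linear-interpolation quartiles, a minimum of 3 examples,
and ranking by Q1 first, then by median.
"""
from statistics import quantiles

MIN_EXAMPLES = 3  # options with fewer rated films are skipped


def summarize(ratings_by_option):
    """Map each option with >= MIN_EXAMPLES ratings to its (Q1, median, Q3)."""
    summary = {}
    for option, ratings in ratings_by_option.items():
        if len(ratings) < MIN_EXAMPLES:
            continue
        q1, median, q3 = quantiles(ratings, n=4, method="inclusive")
        summary[option] = (q1, median, q3)
    return summary


def best_and_worst(summary):
    """Return (best, worst), ranking by Q1 and breaking ties with the median."""
    ranked = sorted(summary, key=lambda opt: (summary[opt][0], summary[opt][1]))
    return ranked[-1], ranked[0]


# Hypothetical ratings (percent) for the options of one story point.
ratings = {
    "Option A": [60, 70, 80],
    "Option B": [72, 74, 90, 91],
    "Option C": [95, 99],  # only 2 examples, so it is ignored
}
summary = summarize(ratings)
print(summary)
print(best_and_worst(summary))  # ('Option B', 'Option A')
```

Ranking by Q1 favors options that are "safe" (few low ratings) over ones with a high median but a long bottom tail, which fits the "least safe / most safe" wording used above.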

Are you guys interested in anything in particular? Maybe I simply haven’t thought of something I could look into.

@bobRaskoph Hi Bob, I didn’t think you would mind… your data is amazing, but a little hard to consume… so I compiled it into a spreadsheet and uploaded it so anyone can download it.

I could see Dramatica adding this as an add-on feature, with story engine templates for the different “genres”. There could be a “genre” selection that would only limit the dropdowns a little but help people stay in the flavor of the movie type they are trying to write.

If someone wants to check my work against Bob’s site data, it’s possible I made a mistake or two. Where the analysis was vaguely presented, I did my best to guesstimate the choices and things to avoid.

EDIT: I added a second tab where the data is sorted by “genre”, and updated the link.

Thank you for your analysis Bob.

Enjoy, everyone.

Just a tiny update on the storyform twins/triplets.

Twos:

Smoke Signals = Thirteenth Floor

Singin’ in the Rain = Station Agent

Star Wars = Birdman

Looper = Wrath of Khan

Pride and Prejudice = Bridget Jones’s Diary

Dogma = Matrix

What Lies Beneath = Arrival

Casablanca = Sicko

Terminator = Unforgiven

Constant Gardener = Beasts of No Nation

Dead Poets Society = Midnight Cowboy

Annie Hall = Harold and Maude

Eastern Promises = Eddie the Eagle

Star Trek (2009) = Moana

Back to the Future = Shrek

Nightcrawler = In the Heat of the Night

Mad Max: Fury Road = Captain America: Civil War (?)

Threes:

Bringing Up Baby = Zombieland = What’s Up, Doc?

Finding Nemo = Collateral = Pitch Perfect

Limey, The = El Mariachi = Roman Holiday

Almost Famous = Life is Beautiful = Creed

Erin Brockovich = Kung Fu Panda = Hacksaw Ridge

Marty = Queen = Big Sick

???

Romeo and Juliet (Optionlock) ~ West Side Story (Timelock)

Something I’ve noticed: the filter page for OS Problem of Reaction does not include “The Queen” for some reason.

I’ve noticed that a lot of the newer analyses have not been showing up on filter pages. For example, The Princess Bride doesn’t appear on the filter page for OS Domain of Psychology, and Zootopia doesn’t appear on the filter page for OS Domain of Mind. @jhull says this is because the site was built for fewer than 300 analyses and that he would adjust the site accordingly.

Source: Dramatica Analysis Page

It’s not that it was built for fewer than 300; I just hardcoded something 'cause I was lazy when I first made it… I will fix it this week. Thanks for reminding me!

No problem!

You’re the best!!! These are great. Thanks for sharing.

Thank you for these meta-analyses. I noticed another “three” after watching the Constant Gardener and thinking “this story seems very similar to Michael Clayton (which was made later).”

The Constant Gardener = Michael Clayton = Beasts of No Nation

Does anyone know if this has a new link address?

I don’t see a link associated with it. Might an Excel sheet not be more useful, something one can sort and also add to? Just an idea.

I found that it’s just a broken link; the site itself isn’t offline. Here’s a link for anyone interested…

https://raskoph.lima-city.de/ is the general page, also linking to the Table of Scenes

and

https://raskoph.lima-city.de/dramatica/Dramatica_Analysis/

is the specific analysis page.

The data used on that page is outdated and, more importantly, was not scrutinized thoroughly. I would not rely on the data there, and especially not on the conclusions regarding genres. Even if the methodology had been validated, the sample sizes are far too small.

Furthermore, I can no longer share the colorful plots I posted before (where you could select story points and/or genres to see where certain combinations fell in terms of release time or ratings), because I messed up.

As far as I’m aware, certain genre statistics are available on Subtext. I’m not a subscriber, so I don’t know whether it agrees with anything I wrote or if it’s even all that related.

TL;DR: don’t read the Analysis pages.

I will remove them once I get back home anyway.

Gotta update the stories with the same storyform list:

Old

- Aliens = Blade Runner 2049

- Smoke Signals = Thirteenth Floor

- Singin’ in the Rain = Station Agent

- Birdman = Star Wars IV: A New Hope (Luke)

- Looper = Star Trek II: Wrath of Khan

- Black Panther = Captain America: Civil War = Lion King = Mad Max: Fury Road = Trainwreck

- Bridget Jones’s Diary = Pride and Prejudice

- Dogma = Matrix

- Bringing Up Baby = What’s Up, Doc? = Zombieland

- Collateral = Finding Nemo = Pitch Perfect

- Big Sick = Marty = Queen

- Casablanca = Sicko

- Terminator = Unforgiven

- Beasts of No Nation = Constant Gardener

- Dead Poets Society = Midnight Cowboy

- Annie Hall = Harold and Maude

- Almost Famous = Creed = Life is Beautiful

- Eastern Promises = Eddie the Eagle

- In the Heat of the Night = Nightcrawler

- Arrival = What Lies Beneath

- Moana = Star Trek (2009)

- Erin Brockovich = Hacksaw Ridge = Kung Fu Panda

New equivalents:

- Skyfall = Top Gun

- Cars = Galaxy Quest

- Jojo Rabbit = Missing Link = Teen Titans Go to the Movies

- Edge of Tomorrow = Planes, Trains, and Automobiles

- El Mariachi = Limey = Raiders of the Lost Ark (Raiders) = Roman Holiday = Star Wars IV (Han) = Thor 3: Ragnarok

- Jungle Book (2016) = Star Wars V (Han)

- Back to the Future = Die Hard = Shrek

- Beauty and the Beast = Jungle Book (1967)

No surprises this time, unfortunately.
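Building a list like this boils down to grouping films whose answers to the twelve essential questions are identical. Here is a minimal Python sketch, assuming each storyform is stored as a dict with the hypothetical keys below. The films and the concern/issue/problem values are placeholders, not real analyses.

```python
from collections import defaultdict

# Hypothetical field names for the twelve essential questions.
ESSENTIAL_KEYS = (
    "resolve", "growth", "approach", "pss", "driver", "limit",
    "outcome", "judgment", "os_domain", "os_concern", "os_issue", "os_problem",
)

# Placeholder storyform; the concern/issue/problem values are dummies.
BASE = {
    "resolve": "Change", "growth": "Stop", "approach": "Do-er", "pss": "Linear",
    "driver": "Action", "limit": "Timelock", "outcome": "Success", "judgment": "Good",
    "os_domain": "Universe", "os_concern": "Concern 1", "os_issue": "Issue 1",
    "os_problem": "Problem 1",
}


def make_storyform(**changes):
    """Copy BASE with some answers changed (illustration only)."""
    return {**BASE, **changes}


def find_equivalents(storyforms):
    """Group titles whose twelve answers are identical; keep groups of 2+."""
    groups = defaultdict(list)
    for title, form in storyforms.items():
        key = tuple(form[k] for k in ESSENTIAL_KEYS)  # hashable fingerprint
        groups[key].append(title)
    return sorted(sorted(titles) for titles in groups.values() if len(titles) > 1)


films = {
    "Film A": make_storyform(),
    "Film B": make_storyform(),
    "Film C": make_storyform(pss="Holistic"),
    "Film D": make_storyform(pss="Holistic"),
    "Film E": make_storyform(),
    "Film F": make_storyform(outcome="Failure"),
}
print(find_equivalents(films))
# [['Film A', 'Film B', 'Film E'], ['Film C', 'Film D']]
```

Using the tuple of answers as a dictionary key makes this a single pass over the films, so it stays cheap even as the number of analyses grows.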

I also made a page where you can see some comparisons (using only the twelve essential questions, though)

https://raskoph.lima-city.de/dramatica/equivalence.php

If you notice any mistakes, let me know

[UPDATE] added these:

- Opposites

- Same except Problem-Solving Style (e.g. Groundhog Day & Lady Bird, Sing! & Elf, The Old Man and the Gun & Little Women)

- Same except Ending (e.g. Sing! & I, Tonya, Home Alone & Sicario)

- Franchise Diversity (i.e. how different the storyforms within one franchise are)

- Multistory Diversity (i.e. how different the storyforms within one narrative are)

- Current Storyforms
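The “Same except …” lists can be produced by comparing storyforms pairwise and keeping the pairs whose differences fall entirely inside a chosen set of questions. Here is a hypothetical sketch: the key names and films are placeholders, not the equivalence page's actual data model. For “Same except Ending”, it compares Outcome and Judgment together, since in Dramatica the ending follows from that pair.

```python
from itertools import combinations

# Hypothetical field names for the twelve essential questions.
ESSENTIAL_KEYS = (
    "resolve", "growth", "approach", "pss", "driver", "limit",
    "outcome", "judgment", "os_domain", "os_concern", "os_issue", "os_problem",
)

BASE = {key: "A" for key in ESSENTIAL_KEYS}  # placeholder answers


def same_except(storyforms, keys):
    """Title pairs that differ in at least one of `keys` and nowhere else."""
    pairs = []
    for (t1, f1), (t2, f2) in combinations(sorted(storyforms.items()), 2):
        diff = {k for k in ESSENTIAL_KEYS if f1[k] != f2[k]}
        if diff and diff <= set(keys):
            pairs.append((t1, t2))
    return pairs


films = {
    "Film A": dict(BASE),
    "Film B": dict(BASE, pss="B"),
    "Film C": dict(BASE, outcome="B", judgment="B"),
    "Film D": dict(BASE, pss="B", driver="B"),
}
print(same_except(films, {"pss"}))                  # [('Film A', 'Film B')]
print(same_except(films, {"outcome", "judgment"}))  # [('Film A', 'Film C')]
```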

You might have noticed that the linked page was broken. This is fixed now, but I had to remove the similarity table.

I also added “Screenwriter Diversity” and “Director Diversity”, though these are not done yet: I still need to fill the table with the screenwriters and directors.

Later on, I will add stuff about the posters that I’ve worked on, though I did not get very far with that yet…

UPDATE:

I added all directors and (screen)writers. You can now compare the “diversity” of their storyforms. The formula is kind of arbitrary, but for me it conveys the impact of certain storyform decisions. A score of 1 means that every single possible answer to the 12 essential questions was used, for which you would need at least 64 storyforms; 0 means there is only one storyform. You only need two storyforms with different Resolve, Growth, Approach, PSS, Driver, Outcome and Judgment to get 0.5, and only two different OS Domains to get 0.25. The remaining 0.25 comes from Limit, OS Concern, OS Issue and OS Problem, weighted concern > issue > problem = limit.
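The post only outlines the weighting, so what follows is a hypothetical reconstruction in Python, not the formula the site actually uses. Assumptions: the seven binary questions share 0.5 equally. The OS Domain share of 0.25 counts in full once two different domains appear, which is one reading of the post. Limit, OS Concern, OS Issue and OS Problem split the last 0.25 using made-up weights that only respect concern > issue > problem = limit, and each of these counts the fraction of its possible answers used (assumed to be 2, 16, 64 and 64).

```python
"""Hypothetical storyform "diversity" score in [0, 1]; not the site's formula."""

BINARY = ("resolve", "growth", "approach", "pss", "driver", "outcome", "judgment")

# Distinct answers a question needs before its share counts in full.
# Binary questions and OS domain saturate at two (our reading of the post);
# the finer questions need every possible answer (assumed Dramatica counts).
NEEDED = {**{k: 2 for k in BINARY}, "os_domain": 2, "limit": 2,
          "os_concern": 16, "os_issue": 64, "os_problem": 64}

# Weights sum to 1.0; the last four are made up, ordered concern > issue > problem = limit.
WEIGHTS = {**{k: 0.5 / len(BINARY) for k in BINARY}, "os_domain": 0.25,
           "os_concern": 0.10, "os_issue": 0.06, "os_problem": 0.045, "limit": 0.045}


def diversity(storyforms):
    """Weighted share of answers used across a non-empty list of storyform dicts."""
    score = 0.0
    for key, weight in WEIGHTS.items():
        distinct = len({form[key] for form in storyforms})
        score += weight * min(1.0, (distinct - 1) / (NEEDED[key] - 1))
    return score


# Usage with placeholder answers: b differs from a in every binary question only.
a = {key: "x" for key in NEEDED}
b = dict(a, **{key: "y" for key in BINARY})
print(diversity([a]))               # 0.0
print(round(diversity([a, b]), 3))  # 0.5
```

Because OS Issue and OS Problem each need 64 distinct answers before they count in full, reaching a score of 1 requires at least 64 storyforms, in line with the description above.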

I’m currently working through the poster stuff.

Does anyone have anything they would like to see statistics on?